Face Recognition in Business — Use Cases and Limitations

This article gives you an overview of what face classification is, how it works, and what its use cases and limitations are.

Want to know more about how to use face classification in your business? Jump straight to Face Recognition in business (part 2) — Possibilities.

Face Recognition, the early days

Let’s begin by noting that face detection and recognition tasks aren’t as new as you might imagine; they’ve actually been around for quite some time. Face detection is the process of locating every face in a given image, whether one or several are present. Face recognition is the next step in the process and involves identifying whose face has been detected.

For example, back when most photos were taken with small cameras instead of smartphones, a face detection (sometimes called “smile detection”) feature already existed. Face detection was used to adjust autofocus and clearly capture the faces in the frame.

In the world of data science, an extremely robust solution for face detection was developed by Paul Viola and Michael Jones in 2001. It works very quickly and detects most faces, but makes the same mistake that people do: sometimes it sees faces in inanimate objects, and so produces quite a few false positive results.

As solutions based on the Viola-Jones algorithm weren’t good enough for industries to adopt, they had to be built on. Techniques for face detection and recognition have improved since, especially with the introduction of convolutional neural networks (CNNs), which provided an important boost to image processing tasks. In some challenges, the application of CNNs has even outperformed humans.

Face Recognition today

The evolution of face detection and recognition algorithms, alongside various optimizations developed by Google, Apple, Facebook, Snapchat, and others, has made it possible to run such algorithms with great speed and efficiency.

Faces can be detected in a video in real time. Face recognition can be performed with human-like accuracy on the Labeled Faces in the Wild dataset. Both tasks are possible using modern mobile devices, though some accuracy tradeoffs may need to be made for the sake of speed. Let’s take a closer look at several real-world applications you’ve most likely heard of or tried.

Face authentication

Nowadays, face recognition is considered to be as secure as fingerprint scanning on mobile phones. Apple, for example, has implemented additional depth sensors to improve security in their products. OnePlus’s face recognition exclusively uses camera sensors. In cases like these, when an algorithm doesn’t recognize a face with enough certainty, it’s safer to say a face hasn’t been recognized than to provide access to the mobile device. This is especially true given that face recognition is a machine learning problem, and its accuracy will only improve over time and with additional training.
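The "reject when unsure" rule can be sketched in a few lines of Python. This is only an illustration, not how Apple or OnePlus implement it: it assumes each face has already been turned into a numeric embedding vector, and the function names and the `max_distance` threshold are hypothetical.

```python
import math

def euclidean_distance(a, b):
    """Straight-line distance between two equal-length embedding vectors."""
    if len(a) != len(b):
        raise ValueError("embeddings must have the same length")
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def should_unlock(enrolled, candidate, max_distance=0.6):
    """Unlock only when the candidate is close enough to the enrolled face.

    max_distance is a hypothetical threshold; every uncertain case is
    treated as a rejection rather than as a match.
    """
    if candidate is None:  # no face was found in the frame
        return False
    return euclidean_distance(enrolled, candidate) <= max_distance

enrolled = [0.10, 0.80, 0.30]                        # made-up embedding
print(should_unlock(enrolled, [0.12, 0.79, 0.33]))   # very similar vector
print(should_unlock(enrolled, [0.90, 0.10, 0.70]))   # very different vector
print(should_unlock(enrolled, None))                 # nothing detected
```

Lowering `max_distance` makes the phone harder to fool but also more likely to refuse its owner, which is the tradeoff described above.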

In my experience, I’ve found that Face Unlock doesn’t always work, though when it does, it’s accurate and lightning-fast. OnePlus was able to recognize my face in poor lighting conditions, with sunglasses on, and even from different angles. It amazes me how phone manufacturers optimize face recognition algorithms to make processing happen in a split second.

Tagging friends

Another popular example of face detection is tagging friends on Facebook. Have you noticed how Facebook automatically draws a box around a face when you point your cursor at it? Or how, once someone has been tagged, you can point the cursor at their face and see their name on the picture? Nowadays Facebook is also capable of automatically tagging your friends in a photo you’ve just uploaded.

Though Facebook doesn’t disclose which algorithms they use to locate faces in an image, there’s a good chance the Histograms of Oriented Gradients (HOG) method is involved. HOG essentially replaces the original image’s pixels with arrows (or gradients) that point toward the areas where the image becomes darker. Because only the direction of change matters, the absolute brightness of each pixel, and with it the lighting conditions, no longer needs to be taken into account. But replacing every pixel in an image with an arrow would be computationally heavy and overly detailed, so the idea is to capture only the general direction of the image’s changes from light to dark. Pixels are grouped into squares of 16 x 16, for example, and each square is then replaced with a single arrow. The task then becomes finding the area most similar to a known HOG pattern. HOG patterns can be trained for different purposes, but in this case we’re discussing face patterns, which have been extracted from a large number of training faces. Though this technique requires more resources than the Viola-Jones algorithm, its results are more accurate and the number of false positives it generates is significantly lower.
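To make the "one arrow per square" idea concrete, here is a minimal pure-Python sketch. It is a simplification under stated assumptions: the image is a plain list of rows of grayscale values, orientations are unsigned (an arrow and its opposite count as the same direction), and the block normalisation and trained classifier that a full HOG detector needs are left out. The function names and defaults are illustrative.

```python
import math

def cell_histograms(image, cell=16, bins=9):
    """Return one gradient-orientation histogram per cell of a grayscale image.

    image is a list of rows of brightness values. cell and bins are
    illustrative defaults, not values taken from any production system.
    """
    height, width = len(image), len(image[0])
    rows, cols = height // cell, width // cell
    hists = [[[0.0] * bins for _ in range(cols)] for _ in range(rows)]
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            gx = image[y][x + 1] - image[y][x - 1]  # horizontal change
            gy = image[y + 1][x] - image[y - 1][x]  # vertical change
            magnitude = math.hypot(gx, gy)
            if magnitude == 0:
                continue  # flat area, no arrow to record
            # Unsigned orientation in [0, 180) degrees.
            angle = math.degrees(math.atan2(gy, gx)) % 180.0
            b = min(int(angle / (180.0 / bins)), bins - 1)
            r, c = y // cell, x // cell
            if r < rows and c < cols:
                hists[r][c][b] += magnitude  # stronger edges count more
    return hists

def dominant_bin(hist):
    """Index of the orientation bin with the most accumulated magnitude."""
    return max(range(len(hist)), key=lambda i: hist[i])

# 8 x 8 test image whose brightness grows from left to right.
ramp = [[x * 10 for x in range(8)] for _ in range(8)]
hists = cell_histograms(ramp, cell=4)
print(dominant_bin(hists[0][0]))  # bin covering horizontal change
```

A detector would then slide a window over these histograms and compare them with a pattern learned from many training faces.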

Smartphone camera AI

Face and object detection are now widely used in smartphone cameras. By detecting certain objects or scenes, camera apps can automatically adjust their settings to produce the best possible image. Face detection is used in “portrait modes,” for example, where a person’s face is captured in great detail while the surrounding scene is blurred.

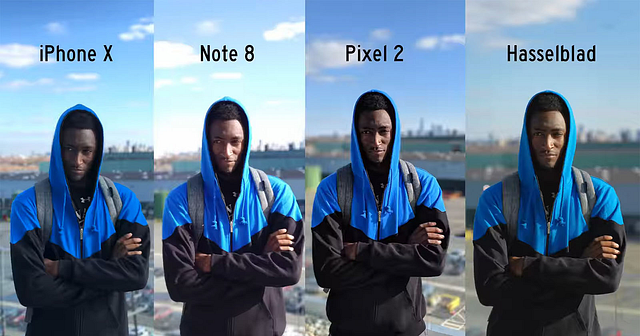

Google seems to have developed the best portrait mode on their Pixel devices — in this example, the contours of the subject’s hair and face are untouched and the background is blurred. Not only has a face been detected, but the exact outline of the subject’s body has also been automatically recognized and delineated.
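The basic idea behind a portrait effect, keeping the subject sharp and blurring everything else, can be sketched as follows. This is not Google's implementation: it assumes a hypothetical `subject_mask` (1 for subject pixels, 0 for background) is already available, for example from a segmentation model, and it uses a simple box blur on a grayscale image.

```python
def box_blur(image, radius=1):
    """Blur a grayscale image by averaging each pixel with its neighbours."""
    height, width = len(image), len(image[0])
    blurred = []
    for y in range(height):
        row = []
        for x in range(width):
            values = [
                image[ny][nx]
                for ny in range(max(0, y - radius), min(height, y + radius + 1))
                for nx in range(max(0, x - radius), min(width, x + radius + 1))
            ]
            row.append(sum(values) / len(values))
        blurred.append(row)
    return blurred

def portrait_effect(image, subject_mask, radius=1):
    """Keep pixels where subject_mask is 1 and blur all other pixels."""
    blurred = box_blur(image, radius)
    return [
        [image[y][x] if subject_mask[y][x] else blurred[y][x]
         for x in range(len(image[0]))]
        for y in range(len(image))
    ]

image = [[0, 0, 0], [0, 90, 0], [0, 0, 0]]   # one bright "subject" pixel
mask = [[0, 0, 0], [0, 1, 0], [0, 0, 0]]     # hypothetical segmentation
for row in portrait_effect(image, mask):
    print(row)
```

The quality of the result depends almost entirely on how precisely the mask follows the subject's outline, which is why details such as hair are the hard part.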

Face Landmarks and 3D Filters

Snapchat, Facebook, and Instagram offer many filters for smartphone cameras that use face detection functionalities. Face contours, eyes, noses, and mouths are detected, and then effects like dog masks are added on top.

This became possible using the Face Landmark Estimation (FLE) method. The goal of this method is to find and localize (or position within the image) what are referred to as face landmarks: points that exist on every face. These could be the edges of someone’s eyes, for example, or the top of a chin. A machine learning FLE technique called a regression tree ensemble was trained on a vast number of examples to be able to localize 68 landmarks on any human face. With this method, face landmarks are detected correctly even when your head is turned or tilted. Once these have been located, the algorithm knows where your mouth, eyes, nose, and chin are. 3D objects can then be placed on the corresponding points and voilà, we have a new Snapchat filter. Face landmarks are also used in Face Swap applications, where one face is extracted and placed on another’s.
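Once landmarks are known, placing a filter is mostly geometry. The sketch below is a simplified 2D illustration, not Snapchat's method: it assumes a landmark detector has already produced pixel coordinates, and the landmark names and the `base_eye_distance` parameter are hypothetical.

```python
import math

def filter_placement(landmarks, base_eye_distance=100.0):
    """Compute where and how to draw an overlay from two eye landmarks.

    landmarks maps hypothetical names to (x, y) pixel coordinates.
    base_eye_distance is the eye spacing the overlay artwork was drawn for.
    """
    lx, ly = landmarks["left_eye"]
    rx, ry = landmarks["right_eye"]
    center = ((lx + rx) / 2.0, (ly + ry) / 2.0)
    eye_distance = math.hypot(rx - lx, ry - ly)
    scale = eye_distance / base_eye_distance
    # Head tilt: angle of the line between the eyes, in degrees.
    rotation = math.degrees(math.atan2(ry - ly, rx - lx))
    return {"center": center, "scale": scale, "rotation": rotation}

# Made-up coordinates for a slightly tilted face.
print(filter_placement({"left_eye": (120, 210), "right_eye": (200, 190)}))
```

The overlay is drawn at `center`, resized by `scale`, and rotated by `rotation`, so it follows the face as it moves closer, further away, or tilts. A real 3D filter would also estimate head pose from more than two points.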

Surveillance

One of the most popular applications of face recognition, of course, is surveillance. In general, humans aren’t very well suited to monitoring surveillance footage from multiple cameras around the clock. This type of work can be optimized. For example, if a camera is placed so as to correctly capture the faces of people passing by it, face recognition can be applied to its footage. Human operators can then be notified when the computer can’t recognize a face or when one that’s worthy of attention has been recognized.
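The notification rule described above can be written as a small decision function. This is a hedged sketch: the function name, the `watchlist` set, and the `min_confidence` threshold are all illustrative assumptions, and the recognition step itself is taken as given.

```python
def triage(match_name, confidence, watchlist, min_confidence=0.8):
    """Decide whether a detected face needs a human operator's attention.

    match_name is the best database match (or None if there is none);
    confidence is the recogniser's score in [0, 1]. All names and
    thresholds here are illustrative.
    """
    if match_name is None or confidence < min_confidence:
        return "alert: unrecognized face"
    if match_name in watchlist:
        return f"alert: {match_name} is on the watchlist"
    return "ignore"

watchlist = {"person_17"}  # hypothetical identifiers
print(triage("person_17", 0.93, watchlist))
print(triage("person_02", 0.91, watchlist))
print(triage("person_02", 0.40, watchlist))
print(triage(None, 0.0, watchlist))
```

Operators only see the two alert cases, so most of the footage never needs human review.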

Dulles Airport, for example, recently implemented a face recognition algorithm to optimize boarding time on planes. The algorithm is used to check faces against a set of databases, verifying passengers’ identities and authorizations for boarding. The system is said to be 99% accurate.

China takes face recognition even more seriously. The country uses it to analyze public surveillance footage and recognize suspicious or criminal individuals. Once cameras recognize a face that authorities are searching for, the individual’s location is sent to the police, who can then react to the situation in person.

Limitations

The face detection and recognition applications discussed above do have some limitations.

In the case of China, a dense network of CCTV cameras is required for the system to work. Footage must be constantly monitored by powerful computers for alarm notifications to be triggered. The system has to be regularly updated to properly recognize new faces. In order to save computational power, however, algorithms should only be trained to recognize people of interest. Other citizens could then be classified as “unrecognized,” as they wouldn’t specifically be sought after.

As this system is used in China, a vast dataset had to be collected to understand the features that determine similarities and differences between faces specific to that region of the world. But as we’ve seen with Apple, a system trained mostly on European faces doesn’t work well in Asia. The same applies to a network trained on Chinese faces: it wouldn’t work well in Europe.

In the case of Google or Facebook, data and computational resources sourced from billions of users make it possible to train extremely intelligent face recognition models. Though systems like these can outperform humans and have very low error rates, small issues still become noticeable for thousands, if not millions, of people when dealing with a user base of that size. A case of false rejection here wouldn’t be too serious: a user would be asked to try again until their face was recognized or until they gave up. If it were a case of false positive recognition, however, a stranger could gain access to another’s personal data, which would very quickly become a critical issue. So face recognition is still vulnerable to a number of potential problems.
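The two kinds of error, and the effect of scale, can be illustrated with a short calculation. All scores, the thresholds, the user count, and the error rate below are made-up numbers chosen for the example, not measurements from any real system.

```python
def error_rates(genuine_scores, impostor_scores, threshold):
    """Return (false rejection rate, false acceptance rate) at a threshold.

    Scores are similarity values; a score >= threshold counts as a match.
    """
    false_rejects = sum(1 for s in genuine_scores if s < threshold)
    false_accepts = sum(1 for s in impostor_scores if s >= threshold)
    return (false_rejects / len(genuine_scores),
            false_accepts / len(impostor_scores))

def affected_users(user_count, error_rate):
    """Expected number of users hit by an error occurring at error_rate."""
    return user_count * error_rate

genuine = [0.95, 0.90, 0.85, 0.60]    # owner compared with own template
impostor = [0.10, 0.30, 0.55, 0.82]   # strangers compared with it

print(error_rates(genuine, impostor, threshold=0.8))  # (0.25, 0.25)
print(error_rates(genuine, impostor, threshold=0.9))  # (0.5, 0.0)
print(round(affected_users(1_000_000_000, 0.001)))    # 1000000
```

Raising the threshold removes the false acceptance at the cost of more false rejections, which is usually the preferred direction when personal data is at stake. The last line shows why even a hypothetical 0.1% error rate matters at a billion users: it still touches about a million people.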

In this article, we explored what face classification is, how it works, and what its use cases and limitations are. If you want to know more about how to use face classification in your business, see Face Recognition in business (part 2) — Possibilities.

MOONCASCADE

Mooncascade is a Machine Learning & Product Development company focused on building new disruptive solutions with real business and market impact. With a team of nearly 100 specialists, we are the chosen product development partner for all regional telecommunication companies, work closely with financial companies, and are often brought in when large industries require an agile and experienced product development partner.

If you happen to be interested in using face classification in your product or