“Thou Shalt Do No Harm”: A Journey Into The Realm of Value-Sensitive Design

Working in technology can sometimes feel a bit like Hogwarts. Think about it: you have a broad selection of magical tools (as well as some helpful Rons and Hermiones working beside you) to create real-life “spells”, a.k.a. products that affect how people work, relax, or just live in general.

Often, this power brings out the best in people. “Our product will improve the world!” “We’re making this world a better place!” The road leading to Facebook, Google, Microsoft and other tech companies is paved with similarly altruistic mission statements.

As well they should – after all, technology has changed our lives for the better in so many ways. From groundbreaking inventions like the internet or smartphones, to industry-disruptive services that have sped up or simplified the way we do things once and for all: Netflix and Spotify changing the way we consume media, Uber and Bolt changing the way we get from one place to another, Apple Pay and Google Wallet changing the way we pay for things… The list goes on and on.

But all magic comes with a price. While high-speed hits like Canva and Stripe have drawn more investors to the technology sector than ever before, they’ve also (inadvertently) created an unrealistic recipe for success: massive amounts of product development work, coupled with an extravagant marketing budget, is supposed to make any new tech product go viral in the first 6 months, bringing instantaneous growth and sky-high ROI for investors! Hooray!

…Except that if this doesn’t happen – and let’s be frank: most times, even with the best of intentions, it doesn’t – the pressure on everyone involved in that product increases significantly. We’ve all seen tech companies gloat about their achievements, and how it took such a small team of technologists to reach such heights – but the truth? Growing and scaling at such an abnormal pace puts an incredible load on that small team’s shoulders.

Which, in turn, leads to more uncertainty and more mistakes within the team, mistakes that often have far-reaching consequences. And we don't just mean the ones that come back to bite the companies themselves (remember the ill-fated product experiment that was the Windows Phone?), but consequences for their users, too. Take the hoverboard craze: so many companies rushed to jump on the trend that many of them made the same mistake, shipping cheap, poorly made batteries and chargers. The result? The many, many videos of flaming hoverboards (and the injuries that came with them) we can still watch on YouTube today.

ETHICS AND TECHNOLOGY: A MURKY BUSINESS

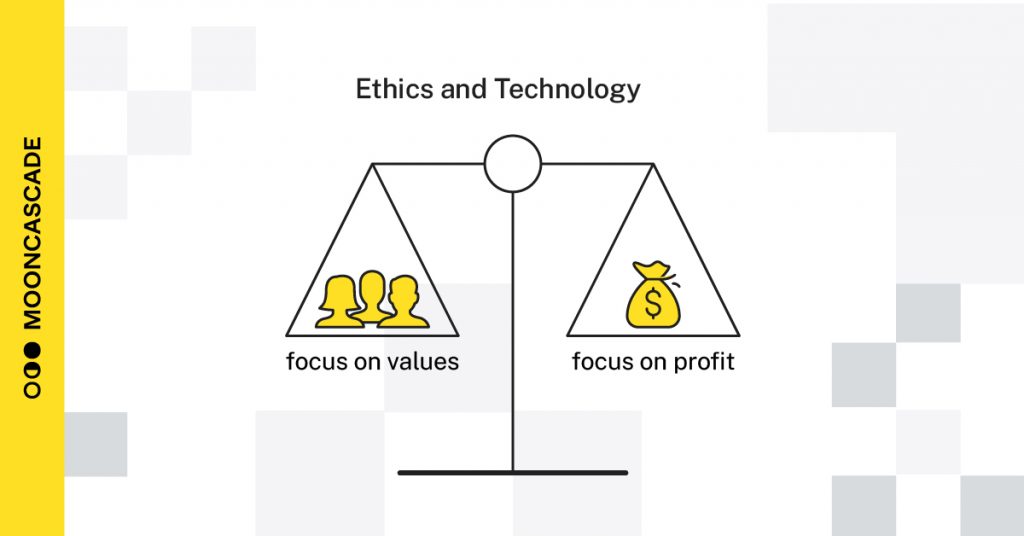

So how can we safeguard against such heated happenings? More and more of us technologists – and by technologists, we mean anyone (person or company) owning, building or involved in technological products – believe that step one is a radical shift of focus. Instead of full-on, growth-centric design that aims to scale (and turn a profit) as soon as possible, we need a more humane approach: one that aligns more with our values as human beings, and less with the bottom line.

But such a change doesn't happen overnight, especially in the historically rule-averse technology sector, which lacks the kind of binding ethical codes that doctors, lawyers and journalists all have to keep in mind!

Of course, the point of these codes is to provide support and guidance in times of turmoil or when difficult decisions have to be made. And in the world of technology, their absence is felt more keenly with every passing week.

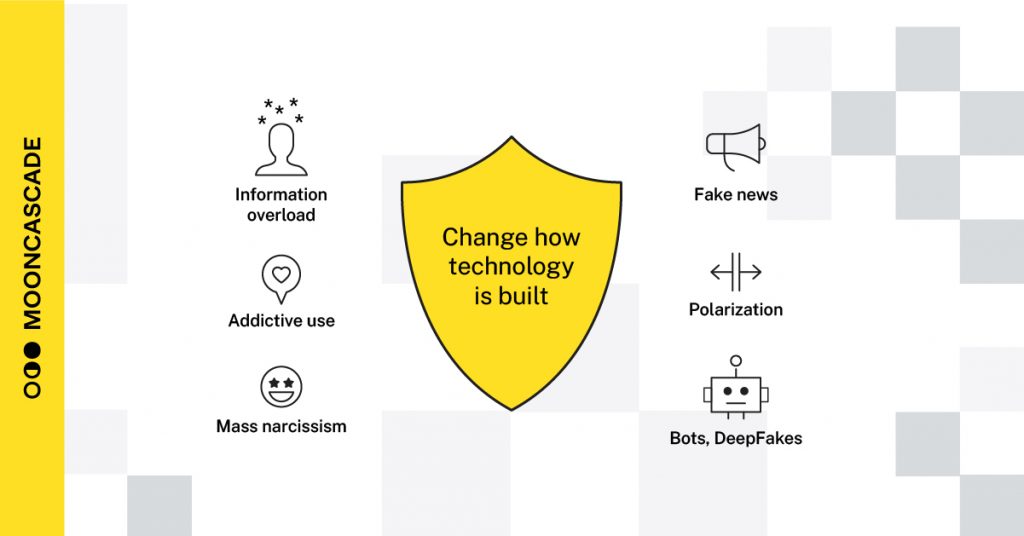

Just think of the many unintended side effects of this lawlessness we keep hearing about in the news. Social media is, naturally, the most obvious example: in one of Facebook's own internal surveys, around 12% of users reported that their use of the platform was affecting their sleep, work and/or relationships, while whistleblower Frances Haugen famously revealed that Facebook knew about (and did little to address) its own research showing that 32% of teen girls said that when they felt bad about their bodies, Instagram made them feel worse. Not to mention the growing prevalence of social media addiction, which shares many traits with substance abuse: definitely an area in urgent need of attention!

But these side effects reach far beyond the borders of Mark Zuckerberg's realm. Tech companies' obsession with keeping users engaged on their platforms and devices has already been linked to a whole host of physical and mental health issues: fragmented attention in children (and adults) who get too much screen time, "filter bubbles" that sort people into ideological groups according to their assumed beliefs, "texting thumb", "phone neck hump" and even obesity. All that "Netflix and chill"-ing is a shortcut to becoming like the humans aboard the spaceship in WALL-E, after all!

Remember the world-bettering intentions we mentioned earlier? Maybe, instead of striving to make the world a better place, the smarter move for tech companies starting out would be to adopt a "Do No Harm" mentality…

A VALUABLE SILVER LINING

It’s not all bad news.

With regulations like GDPR in the EU and COPPA in the US in place, people have, at least, become more mindful of their online presence and of the far-reaching effects technology can have on their lives. (Children's data privacy, in particular, seems to be an area where regulations are slowly but surely being adopted around the world.)

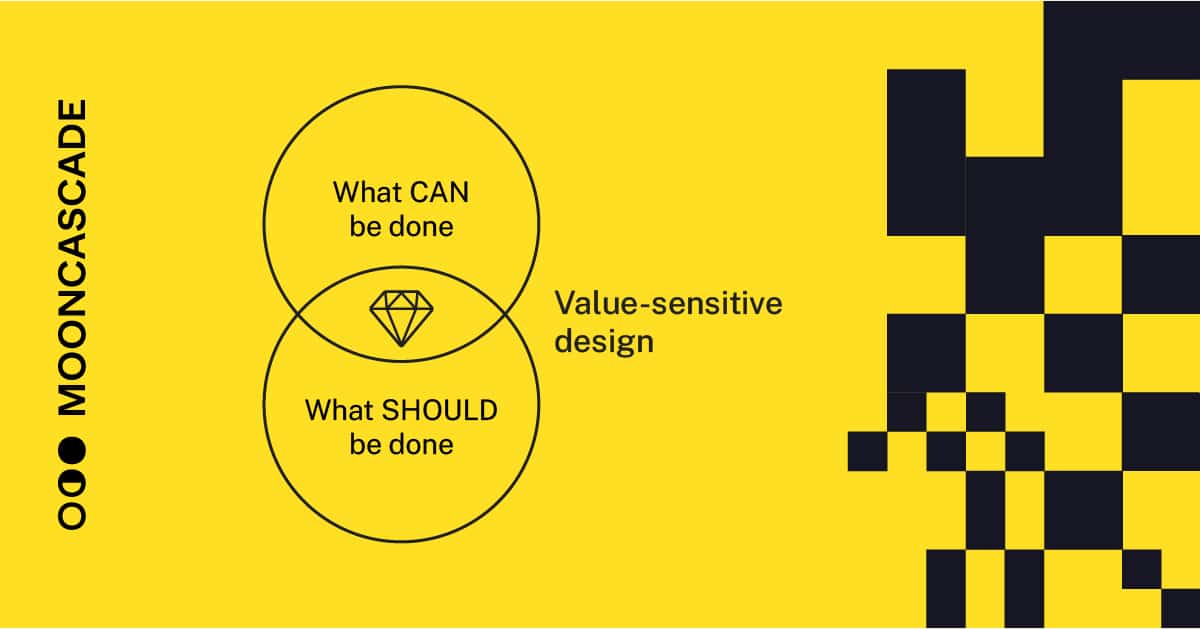

Communities like the Center for Humane Technology have started educating people and businesses about the steps they can take to build digital products more empathetically, and ethical design pioneers like Batya Friedman and Peter Kahn have formalized a truly value-driven approach to product development, coining the term Value Sensitive Design: an iterative process that weighs principles as well as P&L.

And change can be felt even within the technological landscape itself! Think of mindfulness apps, fitness trackers that regularly prompt us to get moving, or BeReal, the social media app that asks users to post just once a day. Or think of subtler changes, like share buttons that nudge you to actually read or watch the content before passing it on, or streaming services asking whether you're still watching after you've binged several episodes of Game of Thrones in a row. One thing's certain: the tide is turning. More and more people are moving away from technology that causes them more pain than pleasure.

So the question is: what can we do to help bring in that tide faster?

As technologists, with or without company buy-in, we have a social responsibility to promote humane technology with whatever tools we have, from value-sensitive design to, literally, anything that works! That means raising awareness of the many issues caused by technology gone wild in our lives, analyzing their impact and the ethical quandaries that result, applying the value-sensitive design process in our daily work, and sharing our experiences of it with our coworkers… It might sound like a tall order, but starting small is an easy way to get going: designing features with users' values and sensitivities in mind instead of just growth and engagement, for example. Even the longest journey starts with a single step, after all!

WANT TO FIND OUT MORE?

Then you're in luck: over the next 6 months, we'll take a deep dive into the ethics of technology today, the humane approach to digital product development and the many ways you yourself can bring values to the center of your work in tech.

So remember to check back soon – or, if you can’t wait that long, just drop us a line and we’ll get back to you with some ideas as soon as we can. We’re all wizards here, after all – the least we can do is help keep our magic safe together!