Leading the way towards a sustainable user-product ecosystem: Human Sensitivities in Design

What’s humane design? And why should we care?

Remember when COVID-19 was first spreading across the world? In the days leading up to nationwide lockdowns, people everywhere – us included! – reached for their phones and scrolled tirelessly through hundreds of headlines, eager for the latest news on The Big Bad Virus. However, most of us didn’t feel calmer afterward.

Instead, we went into panic mode.

When it comes to technology, the tension between what we CAN use it for and what we SHOULD use it for has become a constant battle of opposing forces – kind of like the Jedi and the Sith in the Star Wars universe.

When it comes to our own little galaxy though, we believe technology’s ultimate goal should be to help people become the best version of themselves.

In more practical terms, this means that technology should empower people to make their own decisions and be creative, while also supporting their wellbeing and helping them connect with each other in a healthy way.

Tall order? True. But like all massive undertakings, all we need is to take the first step (and the next one, and the next one) towards our destination. In ethical product development, this first step is relatively simple: we need to understand whether our technological solutions (or features of our solutions) inhibit our human sensitivities.

Wait, what are human sensitivities?

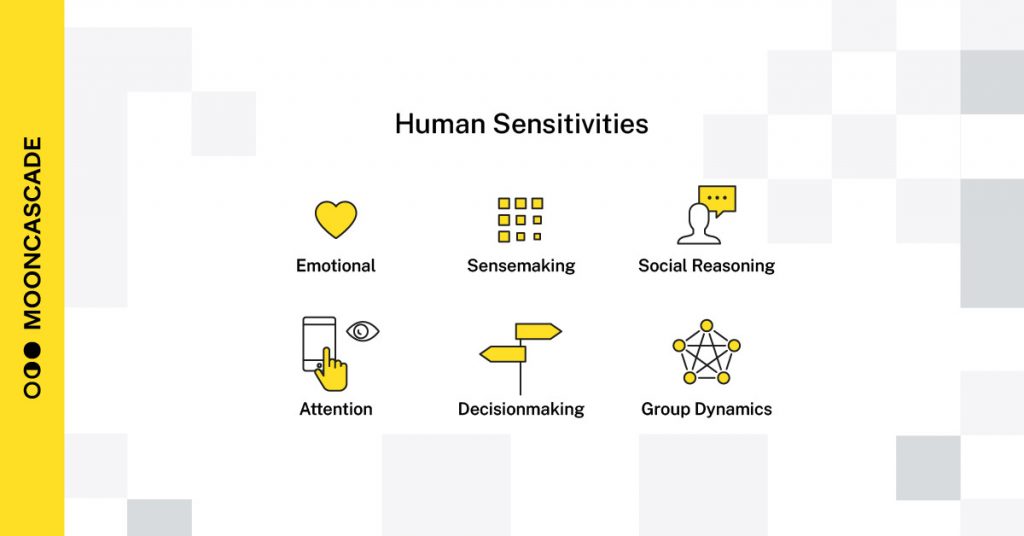

Let’s start with the basics: according to the Center for Humane Technology, Human Sensitivities are “instincts that are vulnerable to new technologies”.

In other words, they are the parts of our character that are most directly affected by using apps, working on our laptops, checking our wearable devices and so on.

Given that our lives have never been as deeply immersed in technology as they are today, this area of academic research is still relatively new. Luckily, we already have some precedents to turn to.

Human psychology has always had an evolutionary aspect; our minds have changed a lot, not just over the past millennia, but even in the past few centuries! Historically, these changes have enabled us to cope with our changing environments.

But some of these rewired mechanisms in our brains – while helping us take optimal actions or make decisions in many contemporary situations – can also inhibit us in others.

Coming back to the world of tech, this means that although it usually simplifies our lives, technology can also tap into our human vulnerabilities – exploiting our (otherwise useful and necessary!) biases and fast decision-making skills for, well, business gains.

“Are you paying attention?!”

By now, attention has become one of the most valued resources of our society. Sadly, it’s also increasingly hard to come by, with multitudes of technological products and functionalities (not to mention ads!) competing for your mind’s center stage 24/7. In a way, this is how life has always been: lots of different inputs to choose from, our brains constantly evaluating what’s worthy of our precious focus…

But today, this bidding war for our attention is so saturated that all those different tech products (plus, of course, increasingly aggressive media tactics) have resorted to using stronger and stronger stimuli to win the grand prize.

And although, ultimately, one of these technologies will capture our attention – an urgent news headline on a phone screen, for example, or a fitness tracker beeping incessantly – it will also inhibit our sense of wellbeing.

A cascade of bad news presented without any stopping cues will easily lead us to doom-scroll on our phones, for example, or to constantly switch context and let our attention be controlled externally. Adopting a more ethical approach to designing these notifications, on the other hand, will encourage us to be more mindful and to keep our attention internally led, which gives users their autonomy back.

Don’t forget your emotions (or else)

But our attention span is not the only thing being exploited by technology. Remember those stronger stimuli that we mentioned earlier? Coupled with the all-too-familiar attitude of “each technological product should be keeping users engaged as long as possible”, they also affect other human sensitivities, like our emotions and social reasoning.

All that amplified content consumption (caused by spending too much time in one technological environment, such as an app) often leads to anxiety, stress, sleep deprivation and a distorted view of ourselves and others. Yet product teams could just as easily nudge users towards more limited content absorption, which would in turn help them feel calmer, more balanced and able to connect more authentically with others, away from their screens.

There are even more sensitivities at play here, like the ability to make sense of information and make decisions. This is actually one of our biggest challenges as humans right now: not because the general level of education or access to information has fallen (it hasn’t), but because the amount of accessible information surrounding us is higher than ever before.

Which means that making sense of it is becoming harder and harder.

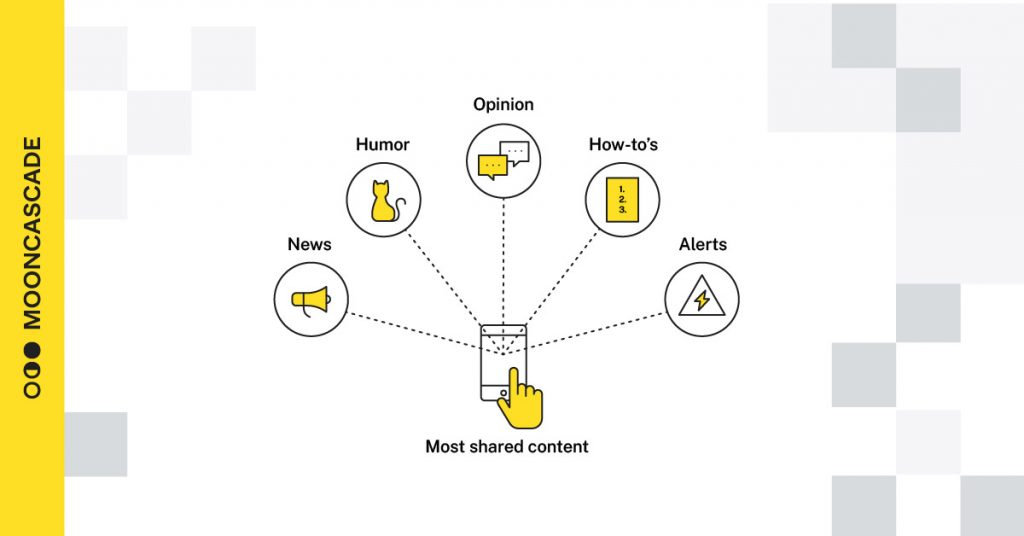

Again, this is connected to the ongoing race for attention and the desire to optimize technological products for maximum screen time: in order to achieve this not-so-ethical goal, businesses are optimizing content for virality, often by taking facts out of context, oblivious to (or regardless of) the price users pay for it.

The problem with designing for virality is that, as humans, we have a tendency to believe a piece of information more readily the more often we see it.

Which makes viral content a powerful tool for spreading both truth and (as we have experienced over the last few years) misinformation – not just on social media platforms, but in “real”, day-to-day life.

And whenever product designers influence people through viral misinformation to take action automatically (i.e. make an unconscious decision about something they’ve seen, read or heard), you know what’s really taking the hit? Their users’ agency and sensemaking skills.

So what happens now?

Establishing the many ways technology can be used for both good and bad is one thing – the question is, what’s the best way to move forward from here? Especially for people involved in creating these products, the ethics of designing technology for scale poses an acute dilemma: is there a way to ensure a more humane way of doing things? Or is it already a lost cause, sealed by businesses’ bottom-line agendas?

For our part, we believe that there is a more humane way of doing things.

The key? Understanding that the users we design our technology for are real-life human beings, and then applying this understanding to practical product discussions.

This will, slowly but surely, enable the technology industry to improve as a whole, and lead to a much more sustainable user-product ecosystem – one in which our human sensitivities are celebrated, not preyed upon.

And the tide has already started to turn.

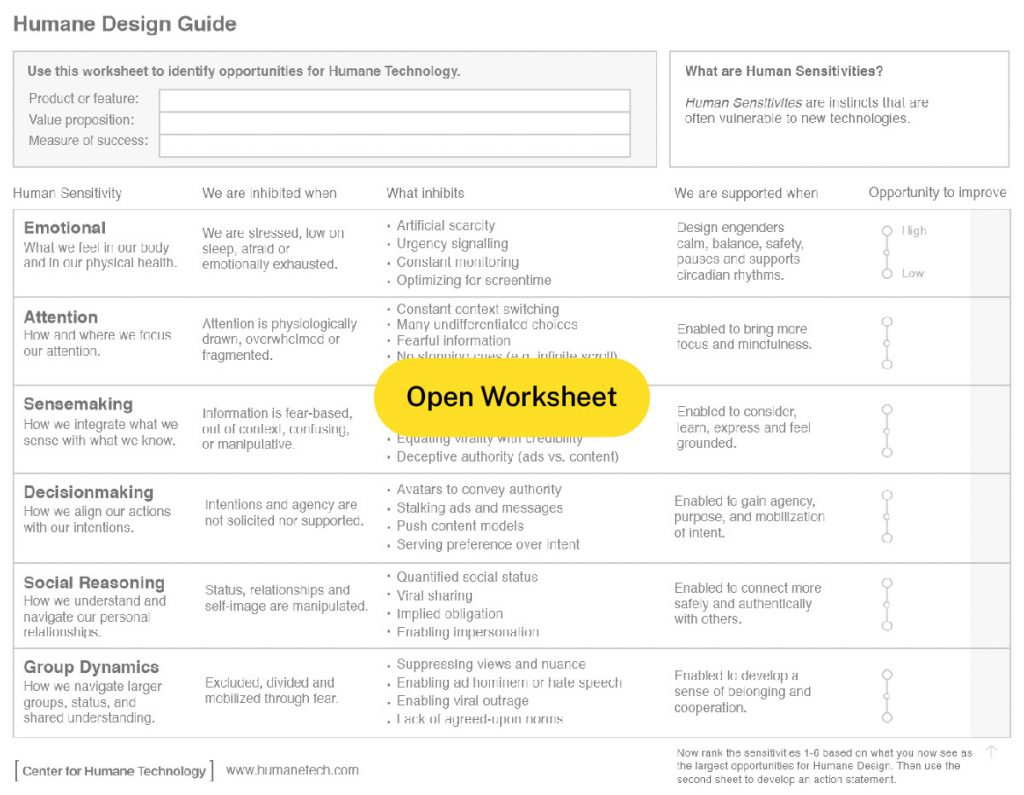

This Humane Design Guide, for example, has collected all the sensitivities that might be affected when interacting with technology into a worksheet, augmenting it with questions that analyze the many ways technology can inhibit or support us, and what action we can take to improve the system.

It’s a tangible way to introduce humane design and technology’s moral implications into our own environments, which may then kickstart an open discussion within our companies about our own responsibilities in this area.

But we can also help you in this area!

Here at Mooncascade, humane product design is most definitely a priority. And we’re happy to share our accumulated knowledge with you whenever it suits you best, so drop us a line and let’s dive in!